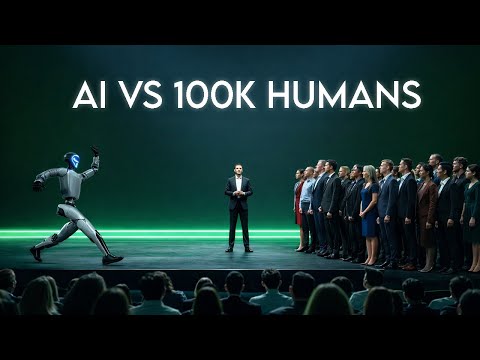

For decades, creativity was considered AI's final frontier: the one domain where machines could never match human ingenuity. That assumption just cracked. A study published January 21, 2026 in Scientific Reports tested 100,000 humans against nine leading AI systems on standardized creativity measures. GPT-4 outscored the typical human participant, and Google's Gemini Pro matched average human performance.

The research marks the largest direct comparison yet conducted between human and machine creativity. But the findings carry a crucial caveat: when researchers isolated the top 10% of human performers, every AI system fell short. The most creative humans still operated on a different level, one that none of the tested language models reached. This suggests AI may democratize baseline creativity while leaving exceptional creative ability, at least for now, a distinctly human trait.