DARPA SyNAPSE Program (2008-2014)

November 2008 - August 2014

What Happened

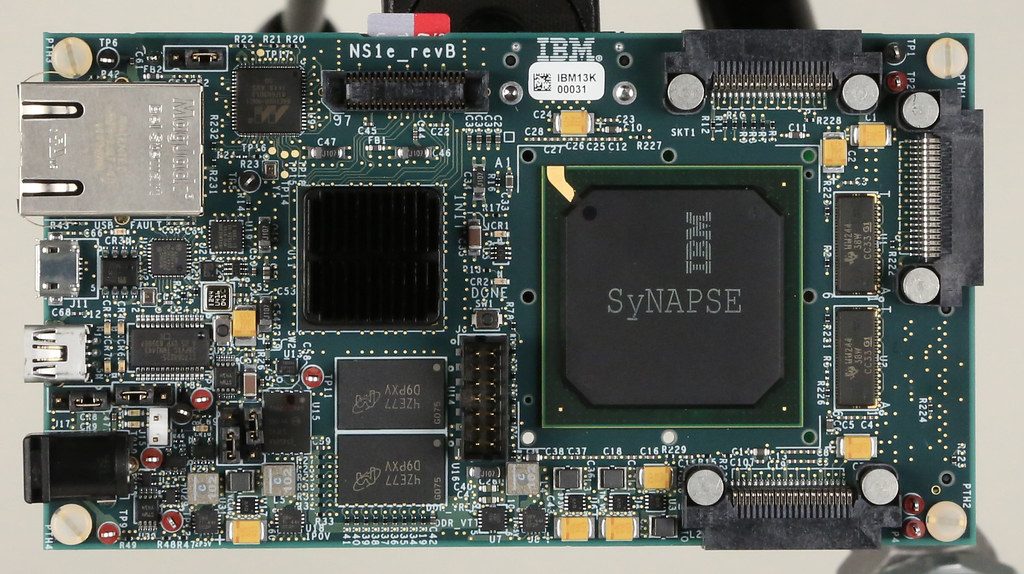

DARPA invested $52 million to develop brain-inspired computing hardware, partnering with IBM, HRL Laboratories, and universities. IBM's team built successive prototypes, culminating in the TrueNorth chip with 1 million neurons on a single die consuming just 70 milliwatts—about one ten-thousandth the power density of conventional processors.

Outcome

TrueNorth demonstrated that neuromorphic hardware could achieve radically better energy efficiency for specific tasks like image recognition.

The program established neuromorphic computing as a serious research field, leading Intel, Samsung, and others to launch competing efforts.

Why It's Relevant Today

Sandia's NeuroFEM breakthrough builds directly on hardware that descended from SyNAPSE—Intel's Loihi architecture emerged partly in response to TrueNorth's success.