Pakistan YouTube Hijacking (2008)

February 2008What Happened

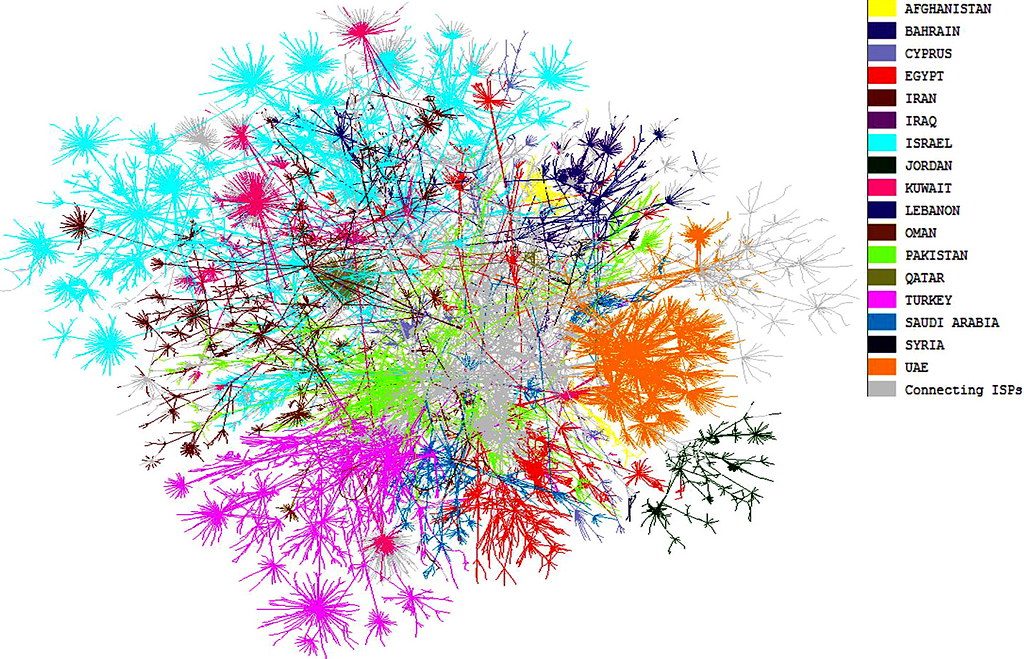

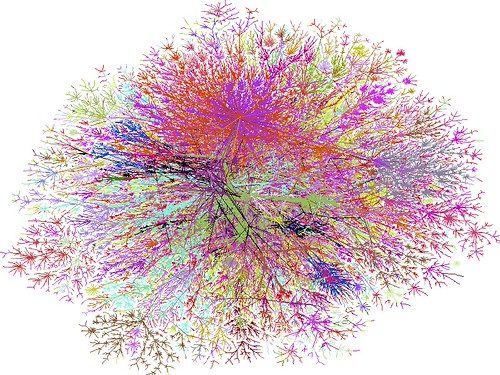

Pakistan Telecom attempted to block YouTube domestically by advertising false BGP routes. Its upstream provider PCCW Global propagated these routes globally, causing YouTube traffic worldwide to be misdirected to Pakistan. YouTube was unreachable for approximately two hours until the erroneous routes were withdrawn.

Outcome

YouTube traffic gradually recovered over two hours as correct routes propagated. The incident prompted calls for BGP security improvements.

The incident became a foundational case study for internet routing vulnerabilities. It accelerated work on Resource Public Key Infrastructure (RPKI), though adoption has remained incomplete nearly two decades later.

Why It's Relevant Today

The 2008 incident demonstrated that BGP's trust-based architecture makes the entire internet vulnerable to local misconfigurations. The February 2026 Cloudflare outage shows this fundamental vulnerability persists, now amplified by traffic concentration through a handful of major providers.